Building a Documentation MCP Server for Vimeo Developers

I watched the AI assistant confidently write code using a deprecated component that had been removed from the codebase six months earlier, then hallucinate methods that never existed in our custom ORM. If you’ve worked with AI coding assistants on a real codebase with hundreds or thousands of contributors, you’ve felt this pain. On large, highly complex codebases, the usefulness of AI-assisted coding goes out the window.

This was my daily reality at Vimeo.

The Evolution of the Vibes

I had been using Windsurf for a few weeks at this point, but its LLM-assisted code writing amounted to little more than a fancy autocomplete. After reading through Windsurf’s documentation, I saw that it supported context files, which could be kept globally or at the project level. They promised a great way to deliver a set of protocols: how to work, how to think through tasks, how to write code, how to handle repeated tasks, and so on.

After creating a thorough context file, I began to see huge differences in the quality of output from Cascade (the Windsurf IDE’s agent). The next leap in productivity arrived when I discovered MCP and tool calls. Servers like filesystem, sequential-thinking, and memory, paired with well-defined context files, turned out to be the secret sauce for production-level vibe coding. These experiments also led me to a highly reliable spec-driven workflow, an approach many LLM clients now build in by default (e.g. “Planning Mode” in Windsurf).

“Overnight my role flipped from engineer to project manager detailing features and technical specifications.”

I now had a spec-driven approach and a habit of writing highly detailed context files that helped the LLM follow best practices when writing code in the Vimeo codebase. I was finally producing good results, but how could I share this with the team?

The Problem: Why LLMs Struggle with Custom Codebases

Vimeo’s platform has many custom patterns and conventions that take a lot of experience to understand, and LLMs lacked the training data (or the capability) to follow the complexities of the codebase.

When writing frontend features, Cascade didn’t understand how to use our SWR-like hooks client to make client-side API requests, and it wouldn’t use our Chakra-based UI library for components, opting instead for deprecated components or entirely new implementations. In the PHP backend, writing tests and working with our in-house ORM was a chore: Cascade leaned hard on its training data and regularly hallucinated methods and patterns that simply didn’t exist in our codebase.

I wanted to make my context documents, and AI-assisted coding in general, more accessible to others, but sharing these context files proved surprisingly difficult. Should they be committed to the repository? What if someone had bespoke instructions they’d rather include in the documentation? The other issue was that my context files were in a format only the Windsurf client understood. Our engineers used a variety of IDEs with integrated LLMs; how could I provide the documentation in a way they could all ingest?

I started looking into MCP (Model Context Protocol) as a way to both centralize and deliver the documentation, while developers’ bespoke instructions could still live in local context files or in their prompts. I fired up Windsurf and broke ground on the first commit of a server that would be a central hub for reading development guidelines and retrieving code samples, a hub that anyone in the organization could contribute to.

The Solution: An MCP Server for Internal Knowledge

MCP (Model Context Protocol) is an open standard for connecting AI assistants to external tools and data. The MCP server would act as a library of guides for working in specific areas of the codebase: common conventions, coding patterns, testing, and how and why things work. The LLM could then pick the right documentation to add to its context based on the repository it was working in and the nature of the user’s prompt.

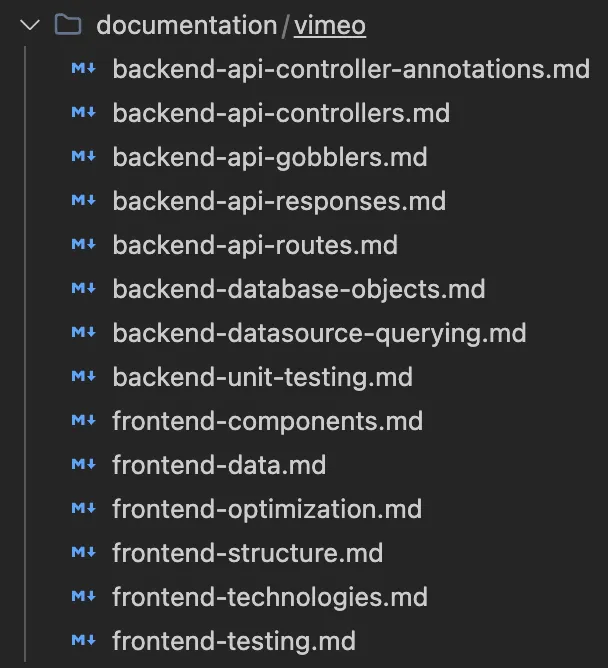

The documentation was stored statically on the server itself as markdown files, organized in a documents/ folder by <code-repository>/<the-discipline>.md - for example, vimeo/frontend-testing.md and vimeo/backend-api-controllers.md.
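A minimal sketch of how a path under documents/ could map to a document URI and back. The docs:// scheme and the pathToUri/uriToPath helpers are hypothetical, not the server’s actual code. The strict segment check in uriToPath reflects the fact that the URI arrives from the LLM and shouldn’t be trusted to stay inside the folder.

```typescript
// Hypothetical URI scheme: docs://<code-repository>/<the-discipline>
const SCHEME = "docs://";

// One path segment: must start with a letter or digit, then letters, digits,
// dots, underscores or hyphens. This rejects "..", hidden files and backslashes.
const SEGMENT = /^[A-Za-z0-9][A-Za-z0-9._-]*$/;

// "vimeo/frontend-testing.md" -> "docs://vimeo/frontend-testing"
function pathToUri(relativePath: string): string {
  const match = relativePath.match(/^([^/]+)\/([^/]+)\.md$/);
  if (!match) {
    throw new Error(`Expected <code-repository>/<the-discipline>.md, got "${relativePath}"`);
  }
  return `${SCHEME}${match[1]}/${match[2]}`;
}

// "docs://vimeo/frontend-testing" -> "vimeo/frontend-testing.md"
// Throws for anything that could escape the documents/ folder.
function uriToPath(uri: string): string {
  if (!uri.startsWith(SCHEME)) {
    throw new Error(`Unsupported URI: ${uri}`);
  }
  const segments = uri.slice(SCHEME.length).split("/");
  if (segments.length !== 2 || !segments.every((s) => SEGMENT.test(s))) {
    throw new Error(`Malformed document URI: ${uri}`);
  }
  return `${segments[0]}/${segments[1]}.md`;
}
```

With these helpers, vimeo/frontend-testing.md round-trips to docs://vimeo/frontend-testing and back, while something like docs://../secrets is rejected before it reaches the filesystem.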

The guides themselves were created from a combination of existing documentation, common coding pitfalls I’d experienced, and directed LLM analysis of relevant files and patterns for particular areas and disciplines.

The Three-Iteration Journey: A Comedy of Errors

I was familiar with MCP: I’d used it a number of times and understood it conceptually. Still, implementing the protocol myself was initially daunting, even with Anthropic’s SDK. This was my first attempt at building an MCP server.

First Attempt: Framework Frustration

I initially opted for an MCP framework that was gaining some popularity, assuming it would be fine for getting my idea up and running for quick validation. But time and time again, I could not get LLM clients to get results from the tool call.

After a week of debugging and growing frustration, I was ready to give up on the framework approach.

Second Attempt: Same Problem, Different Framework

Frustrated, I scrapped it for another framework, thinking (incorrectly) that the first framework was doing something wrong and that its level of abstraction was not configurable enough for my needs. With the second framework, however, I ran into the same problem.

This led me to believe that I needed to work with the core protocol, not someone else’s abstractions, in order to understand and properly debug any issues.
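Working with the core protocol means working with JSON-RPC 2.0 messages. A client opens with an initialize request, sends a notifications/initialized notification, and then calls methods such as tools/list and tools/call. The SDK’s low-level Server class handles this plumbing, but a hand-rolled dispatcher shows the flow more plainly. This is an illustrative sketch rather than the server described here; the server name, the supported-version list, and the handlers map are assumptions.

```typescript
type JsonRpcId = string | number;

interface JsonRpcMessage {
  jsonrpc: "2.0";
  id?: JsonRpcId; // absent on notifications
  method: string;
  params?: Record<string, unknown>;
}

type Handler = (params: Record<string, unknown>) => unknown;

// Protocol revisions this sketch accepts, newest first (an assumption; check the spec).
const SUPPORTED_VERSIONS = ["2025-06-18", "2025-03-26", "2024-11-05"];

// Handle one incoming JSON-RPC message. Returns the response object,
// or null for notifications, which never get a reply.
function dispatch(message: JsonRpcMessage, handlers: Map<string, Handler>): object | null {
  if (message.id === undefined) {
    return null; // e.g. "notifications/initialized"
  }
  const id = message.id;
  const params = message.params ?? {};

  if (message.method === "initialize") {
    const requested = String(params.protocolVersion ?? "");
    return {
      jsonrpc: "2.0",
      id,
      result: {
        // Echo the client's version if supported, otherwise offer the newest one.
        protocolVersion: SUPPORTED_VERSIONS.includes(requested) ? requested : SUPPORTED_VERSIONS[0],
        capabilities: { tools: {} }, // this server advertises tools only
        serverInfo: { name: "dev-docs-sketch", version: "0.1.0" },
      },
    };
  }

  const handler = handlers.get(message.method);
  if (!handler) {
    // Standard JSON-RPC "method not found" error code.
    return { jsonrpc: "2.0", id, error: { code: -32601, message: `Method not found: ${message.method}` } };
  }
  try {
    return { jsonrpc: "2.0", id, result: handler(params) };
  } catch (err) {
    // Standard JSON-RPC "internal error" code.
    return { jsonrpc: "2.0", id, error: { code: -32603, message: String(err) } };
  }
}
```

A server that doesn’t implement a method answers with the standard “method not found” error (-32601), which is worth recognizing when reading transport logs.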

Third Attempt: Going Direct

My fears of working with the protocol directly turned out to be unfounded; porting my previous work to the raw protocol was straightforward. But when I went to test it in Claude, it failed again with the same error.

What was I missing?

The Moment Everything Clicked: Resources vs Tools

My initial approach for document discoverability was to expose the files as Resources. “A resource in MCP allows servers to share data that provides context to language models, such as files, database schemas, or application-specific information.”

Since I was working with a set of static files, a resource list felt like the right way to make the documents discoverable to the LLM agent. Once it found the documents it needed, it could fetch their contents by the relevant URI.

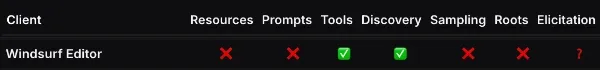
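For reference, a Resources-based server answers resources/list with metadata only and returns document bodies from resources/read. The sketch below builds both results from an in-memory index, using local types that cover a subset of the specification’s fields; the DocEntry shape and the sample data are assumptions.

```typescript
// One indexed guide (hypothetical shape).
interface DocEntry {
  uri: string;         // e.g. "docs://vimeo/frontend-testing" (hypothetical scheme)
  name: string;        // human-readable name
  description: string; // what the guide covers
  markdown: string;    // full document body
}

// Subset of the MCP result shapes; the spec defines further optional fields.
interface ListResourcesResult {
  resources: { uri: string; name: string; description?: string; mimeType?: string }[];
}

interface ReadResourceResult {
  contents: { uri: string; mimeType?: string; text: string }[];
}

// Result for a "resources/list" request: metadata only, no document bodies.
function listResources(docs: DocEntry[]): ListResourcesResult {
  return {
    resources: docs.map(({ uri, name, description }) => ({
      uri,
      name,
      description,
      mimeType: "text/markdown",
    })),
  };
}

// Result for a "resources/read" request with params { uri }.
function readResource(docs: DocEntry[], uri: string): ReadResourceResult {
  const doc = docs.find((d) => d.uri === uri);
  if (!doc) {
    throw new Error(`Resource not found: ${uri}`);
  }
  return { contents: [{ uri: doc.uri, mimeType: "text/markdown", text: doc.markdown }] };
}
```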

Yet it wasn’t until my third iteration of the server that I found the problem. Even though Resources were part of the protocol, the clients I was using didn’t support them yet: at the time, neither Windsurf nor Claude Desktop supported the Resource feature (though Claude Desktop does now).

“So maybe there wasn’t anything wrong with those frameworks…,” I thought. Oh well.

Tools Acting Like Resources

So I went back to the drawing board to figure out how to use the Tool feature to the same effect. I went down a bit of a rabbit hole implementing a Natural Language Processing query tool that retrieved the documentation best matching the language in the prompt. It worked OK, but it left something to be desired when the prompt wasn’t descriptive enough or overlapped with multiple documents, and it would need a lot of tinkering. That didn’t feel sustainable if the goal was to keep growing the library.
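The NLP query tool isn’t shown here, so this is a deliberately naive stand-in rather than the actual implementation: it scores each document by counting words shared between the prompt and the document’s description. Even this toy version reproduces the failure mode described above, since a vague prompt tends to tie across several documents. DocSummary, tokenize, and rankDocs are hypothetical names.

```typescript
interface DocSummary {
  uri: string;
  description: string;
}

// Lowercase words of three or more letters: a crude stand-in for real NLP.
function tokenize(text: string): Set<string> {
  return new Set(text.toLowerCase().match(/[a-z]{3,}/g) ?? []);
}

// Score each document by how many prompt words appear in its description,
// highest score first. Ties keep their original order (Array.prototype.sort is stable).
function rankDocs(prompt: string, docs: DocSummary[]): { uri: string; score: number }[] {
  const promptWords = tokenize(prompt);
  return docs
    .map((doc) => {
      let score = 0;
      for (const word of tokenize(doc.description)) {
        if (promptWords.has(word)) score++;
      }
      return { uri: doc.uri, score };
    })
    .sort((a, b) => b.score - a.score);
}
```

With two descriptions such as “Writing tests for frontend components” and “Writing tests for backend API controllers,” the prompt “write some tests for this feature” scores 2 against each (on “tests” and “for”), so there is no clear winner; adding “backend API controllers” to the prompt breaks the tie.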

So I arrived at a rather simple conclusion: What if I had a Tool that acted like a Resource List?

Calling the tool would return a list of document descriptions with accompanying URIs. The LLM would then use its own judgment to look up the best documents with a second tool that fetched content by URI. This approach beat the alternatives in simplicity, maintainability, and effectiveness. Incidentally, I later learned it’s similar to the approach taken by Context7, a popular context-providing MCP server.
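Before a client can call either tool, the server has to advertise both in its tools/list response, each with a name, a description, and a JSON Schema for its input. The handler that follows only covers tools/call, so here is a sketch of matching definitions. The tool names come from the server; the description wording and the uri property schema are assumptions.

```typescript
// Shape of one entry in a "tools/list" result (subset of the MCP Tool type).
interface ToolDefinition {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: string; description?: string }>;
    required?: string[];
  };
}

const TOOLS: ToolDefinition[] = [
  {
    name: "list-documents",
    description:
      "List available development guides with a short description and URI for each. " +
      "Call this first, then fetch the most relevant guides with get-document.",
    inputSchema: { type: "object", properties: {} },
  },
  {
    name: "get-document",
    description: "Fetch the full markdown content of one guide by its URI.",
    inputSchema: {
      type: "object",
      properties: {
        uri: { type: "string", description: "A URI returned by list-documents" },
      },
      required: ["uri"],
    },
  },
];

// Result for a "tools/list" request; with the SDK this would be returned
// from a handler registered for ListToolsRequestSchema.
function listTools(): { tools: ToolDefinition[] } {
  return { tools: TOOLS };
}
```

The descriptions do real work: they are what the model reads when deciding which tool to call, so a hint like “call this first” nudges it toward the list-then-fetch sequence.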

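```typescript
import { CallToolRequestSchema } from "@modelcontextprotocol/sdk/types.js";

// mcpServer is assumed to be the SDK's low-level Server instance;
// DocumentIndexer and getDoc are project helpers defined elsewhere (not shown).
mcpServer.setRequestHandler(CallToolRequestSchema, async (request) => {
  // Tool 1: return the index of every guide (description + URI) as JSON text.
  if (request.params.name === "list-documents") {
    const docs = await new DocumentIndexer().indexDocuments();
    return {
      content: [
        {
          type: "text",
          mimeType: "application/json",
          text: JSON.stringify(docs),
        },
      ],
    };
  }

  // Tool 2: return the markdown content of a single guide, looked up by URI.
  if (request.params.name === "get-document") {
    const uri = request.params.arguments?.uri;
    if (typeof uri !== "string") {
      throw new Error("uri is required");
    }
    const content = getDoc(uri);
    return {
      content: [
        {
          type: "text",
          mimeType: "text/markdown",
          text: content,
        },
      ],
    };
  }

  throw new Error(`Unknown tool: ${request.params.name}`);
});
```

Pretty straightforward: list-documents uses DocumentIndexer to parse and list all the documentation in the /documentation folder, and get-document fetches the requested document by URI and returns it as markdown.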
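DocumentIndexer itself isn’t shown. One plausible approach, sketched here as an assumption, derives each entry’s title from the document’s first top-level heading and its description from the first paragraph, so contributors only have to write ordinary markdown. summarizeDoc and DocIndexEntry are hypothetical names.

```typescript
interface DocIndexEntry {
  uri: string;
  title: string;
  description: string;
}

// Build one list-documents entry from raw markdown: the first "# " heading becomes
// the title (falling back to the URI), and the first paragraph of non-heading
// text becomes the description.
function summarizeDoc(uri: string, markdown: string): DocIndexEntry {
  const lines = markdown.split(/\r?\n/);
  const heading = lines.find((line) => line.startsWith("# "));
  const title = heading ? heading.slice(2).trim() : uri;

  const paragraph: string[] = [];
  for (const line of lines) {
    const text = line.trim();
    const isBoundary = text === "" || text.startsWith("#");
    if (isBoundary) {
      if (paragraph.length > 0) break; // the first paragraph has ended
      continue; // still skipping leading headings and blank lines
    }
    paragraph.push(text);
  }
  return { uri, title, description: paragraph.join(" ") };
}
```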

Real Impact: An Estimated 75% Better Code Quality

In practice, I prompt the LLM to work on a feature and “…refer to vimeo-dev-docs for the most relevant guidelines and best practices regarding [the feature].” I’ve never seen it pull the wrong documentation. I have no hard metric to point to, but by my own estimate the code it produces with the tool is about 75% better than without it.

That doesn’t mean it works autonomously or produces bug-free code, but it substantially increases my confidence in the code it produces and reduces the number of mistakes I have to fix myself. The improvements show up as:

- Better adherence to our coding patterns and conventions
- Fewer bugs in the output
- More maintainable code structure
- Correct use of our internal libraries and tools
- Faster (and correct) generation of test suites for new features

Organizational Adoption

The server lives as a repository in the Vimeo GitHub organization, so contributing documentation is as simple as adding new markdown files to the static files directory. I’ve pitched it in a few Slack channels, and the folks who’ve used it have given it high praise. I’m not sure how many people are actually using it, but I intend to find other avenues for spreading the word.

Key Lessons Learned

1. Don’t Always Reach for the Framework

A framework seems simpler because it abstracts away the messy parts, but most of the time you need to understand those messy parts intimately to appreciate the abstraction. In the case of MCP, the protocol is fairly straightforward and Anthropic’s SDK is good. The most difficult part is getting the response JSON into a shape the protocol accepts.
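Because the response shape is the sticking point, a small runtime check can catch malformed tool results before a client rejects or ignores them. This hand-written sketch checks only a subset of the specification (a content array of typed items, string text on text items, and an optional boolean isError); the SDK’s own zod schemas, such as CallToolResultSchema, are the more complete option.

```typescript
// Content item types defined by recent MCP revisions; only "text" is checked in depth.
const CONTENT_TYPES = new Set(["text", "image", "audio", "resource", "resource_link"]);

// Return a list of problems with a tool-call result; an empty list means it passed.
function checkToolResult(result: unknown): string[] {
  if (typeof result !== "object" || result === null) {
    return ["result must be an object"];
  }
  const problems: string[] = [];
  const { content, isError } = result as { content?: unknown; isError?: unknown };

  if (!Array.isArray(content)) {
    problems.push("content must be an array");
  } else {
    content.forEach((item, i) => {
      const type = (item as { type?: unknown } | null)?.type;
      if (typeof type !== "string" || !CONTENT_TYPES.has(type)) {
        problems.push(`content[${i}].type is missing or unknown`);
      } else if (type === "text" && typeof (item as { text?: unknown }).text !== "string") {
        problems.push(`content[${i}].text must be a string`);
      }
    });
  }

  if (isError !== undefined && typeof isError !== "boolean") {
    problems.push("isError must be a boolean when present");
  }
  return problems;
}
```

A common slip is returning content as a bare string or a single object instead of an array; checkToolResult flags that as “content must be an array.”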

2. Use the Protocol’s Inspector Tool

The MCP Inspector is a quick and easy way to test your server’s output before making the jump to installing it in your LLM client.

3. Check What Clients Actually Support

My biggest stumbling block was assuming that because Resources were part of the MCP protocol, they were universally supported. Always verify implementation support across your target clients.

Looking Forward: The Bigger Vision

I think the biggest improvement I could make would be to dockerize the server and have it boot up alongside the other containers in our development environment. That would greatly improve its legitimacy and visibility within the organization, not to mention make it easier for developers to set up.

In the future, it would be nice to add some oversight of the quality and maintenance of the documentation: peer review of new documentation, for instance, and an established group in charge of updating existing documentation. Maybe there’s some crazy future where an automation analyzes code areas and disciplines and suggests documentation updates to the humans in the maintenance group.

But a document provider is just the tip of the iceberg of functionality that could be dreamed up to improve and speed up employee workflows. And it doesn’t stop with engineering teams: you can build internal tools to support every department in a company.

MCP servers are fast becoming the de facto way for LLMs to interact with third-party services and external knowledge. This pattern of domain-specific MCP servers for internal tooling is something other companies should absolutely consider adopting.

Your Turn: Getting Started

If you’re struggling with AI assistants that don’t understand your codebase, start simple: identify your three biggest pain points, document the correct patterns, and build a basic MCP tool to share that knowledge. The protocol is more approachable than you think, and the productivity gains are transformative.

The transformation from individual vibe-coding to systematic, tool-enhanced AI development has been remarkable. What started as personal productivity experiments has evolved into organizational infrastructure that democratizes both AI-assisted development and institutional knowledge sharing.

Take a look at a stripped-down base version of the dev-docs-mcp, and feel free to use it as a starter for your own development documentation MCP server!
